Kaggle

Analyze any computer vision datasets from Kaggle.

Sign Up

To load any dataset from Kaggle you first need to sign-up for an account. It's free.

On Kaggle, you can browse for a dataset of interest and manually download it on your machine.

Kaggle API

Alternatively, you can use the Kaggle API to programmatically download any dataset using Python.

To install the Kaggle API run

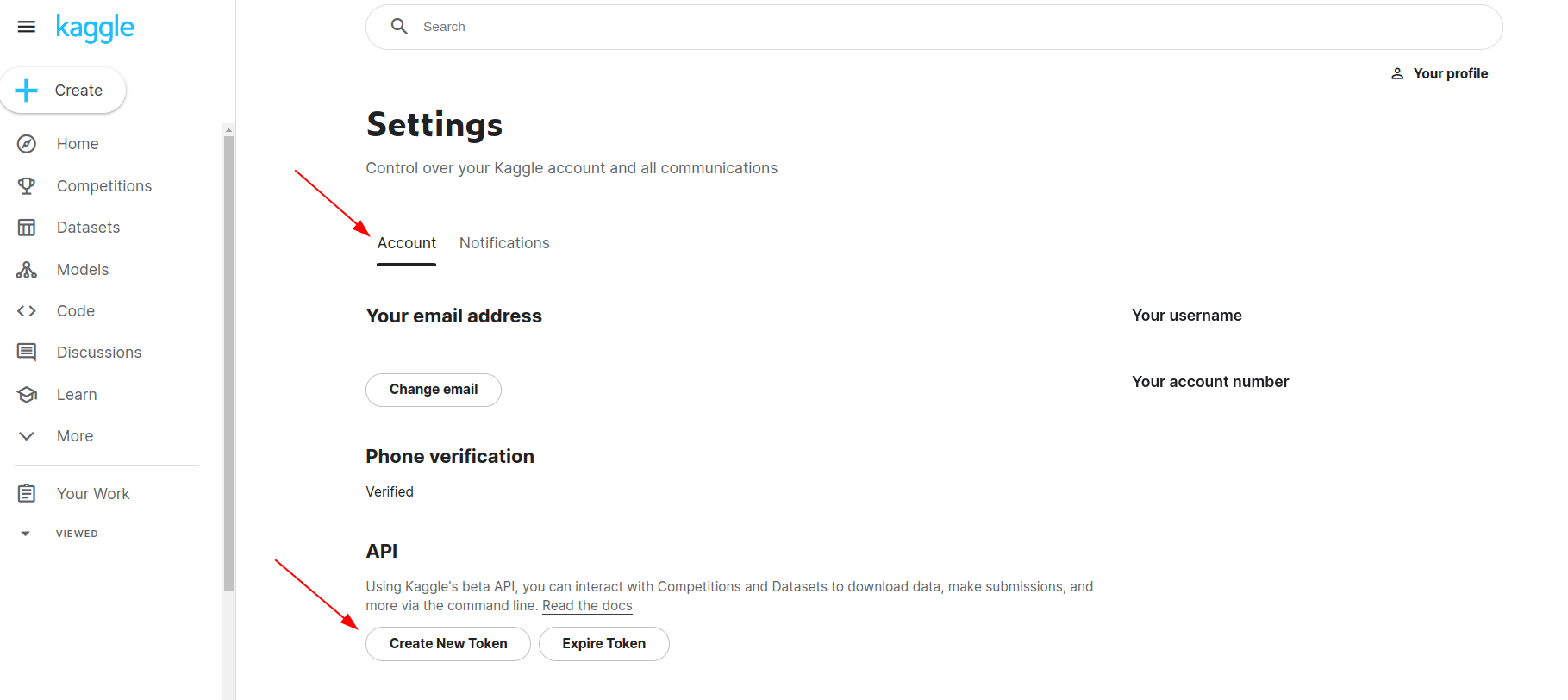

pip install -Uq kaggleAfter signing up for an account Kaggle account, head over to the 'Account' tab and select 'Create API Token'. This will trigger the download of kaggle.json, a file containing your API credentials.

Place this file in the location ~/.kaggle/kaggle.json (on Windows in the location C:\Users\<Windows-username>\.kaggle\kaggle.json. Read more here.

If the setup is done correctly, you should be able to run the Kaggle commands on your terminal. For instance, to list Kaggle datasets that have the term "computer vision", run

kaggle datasets list -s "computer vision"ref title size lastUpdated downloadCount voteCount usabilityRating

------------------------------------------------------------ -------------------------------------------------- ----- ------------------- ------------- --------- ---------------

jeffheaton/iris-computer-vision Iris Computer Vision 5MB 2020-11-24 21:23:29 1415 20 0.875

bhavikardeshna/visual-question-answering-computer-vision-nlp Visual Question Answering- Computer Vision & NLP 411MB 2022-06-14 04:32:28 421 37 0.8235294

sanikamal/horses-or-humans-dataset Horses Or Humans Dataset 307MB 2019-04-24 20:09:38 8405 120 0.875

phylake1337/fire-dataset FIRE Dataset 387MB 2020-02-25 16:45:29 12098 180 0.875

fedesoriano/cifar100 CIFAR-100 Python 161MB 2020-12-26 08:37:10 4881 116 1.0

fedesoriano/chinese-mnist-digit-recognizer Chinese MNIST in CSV - Digit Recognizer 8MB 2021-06-08 12:15:47 966 45 1.0

bulentsiyah/opencv-samples-images OpenCV samples (Images) 13MB 2020-05-19 14:36:01 2374 72 0.75

jeffheaton/traveling-salesman-computer-vision Traveling Salesman Computer Vision 3GB 2022-04-20 01:13:17 183 22 0.875

sanikamal/rock-paper-scissors-dataset Rock Paper Scissors Dataset 452MB 2019-04-24 19:53:04 4556 78 0.875

muratkokludataset/dry-bean-dataset Dry Bean Dataset 5MB 2022-04-02 23:19:30 2303 1464 0.9375

juniorbueno/opencv-facial-recognition-lbph OpenCV - Facial Recognition - LBPH 6MB 2021-12-01 10:47:12 487 45 0.875

rickyjli/chinese-fine-art Chinese Fine Art 323MB 2020-05-02 03:00:40 821 38 0.8235294

mpwolke/cusersmarildownloadsmondrianpng Computer Vision. C'est Audacieux, Luxueux, Chic! 417KB 2022-04-10 21:41:35 10 20 1.0

paultimothymooney/cvpr-2019-papers CVPR 2019 Papers 5GB 2019-06-16 18:28:50 934 50 0.875

emirhanai/human-action-detection-artificial-intelligence Human Action Detection - Artificial Intelligence 147MB 2022-04-22 21:07:24 1468 40 1.0

vencerlanz09/plastic-paper-garbage-bag-synthetic-images Plastic - Paper - Garbage Bag Synthetic Images 451MB 2022-08-26 09:57:18 1127 76 0.875

shaunthesheep/microsoft-catsvsdogs-dataset Cats-vs-Dogs 788MB 2020-03-12 05:34:30 27897 345 0.875

birdy654/cifake-real-and-ai-generated-synthetic-images CIFAKE: Real and AI-Generated Synthetic Images 105MB 2023-03-28 16:00:29 1702 44 0.875

ryanholbrook/computer-vision-resources Computer Vision Resources 13MB 2020-07-23 10:40:17 2491 11 0.1764706

fedesoriano/qmnist-the-extended-mnist-dataset-120k-images QMNIST - The Extended MNIST Dataset (120k images) 19MB 2021-07-24 15:31:01 844 29 1.0 See more commands here.

Optionally, you can also browse the Kaggle webpage to see the dataset you're interested to download.

Download Dataset

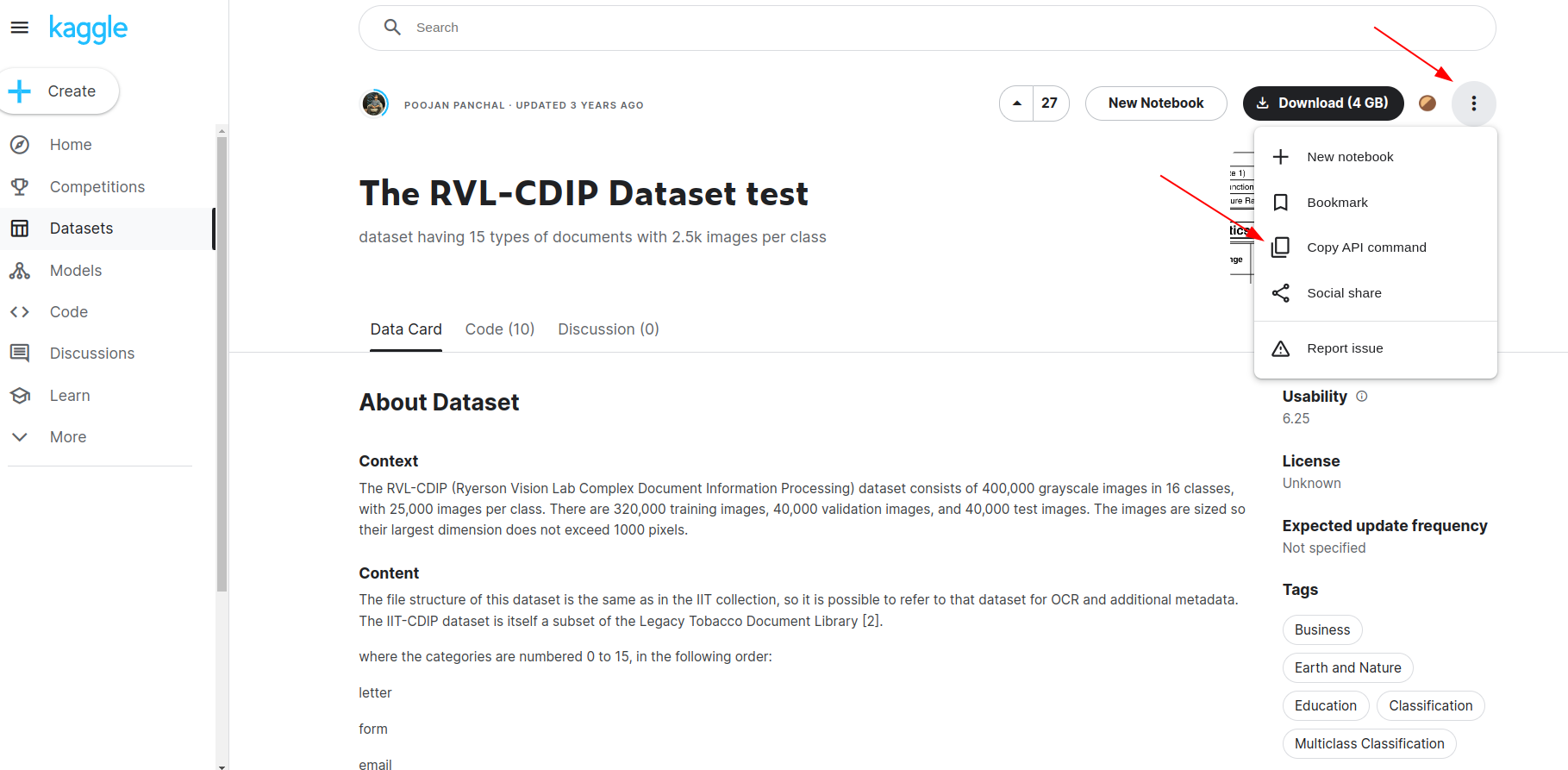

Let's say we're interested in analyzing the RVL-CDIP Test Dataset.

You can head to the dataset page click on the 'Copy API command' button and paste it into your terminal.

kaggle datasets download -d pdavpoojan/the-rvlcdip-dataset-testOnce done, we should have a the-rvlcdip-dataset-test.zip in the current directory.

Let's unzip the file for further analysis with fastdup in the next section.

unzip -q the-rvlcdip-dataset-test.zipOnce completed, we should have a folder with the name test/ which contains all the images from the dataset.

Install fastdup

Now that we have our dataset in place, let's install fastdup.

pip install fastdupNow, test the installation by printing the version. If there's no error message, we are ready to go.

import fastdup

fastdup.__version__'1.36'Load Annotations

InfoThis step is optional. fastdup works with both labeled and unlabeled datasets.

If you decide not to load the annotations you can simply run fastdup with just the following codes.

import fastdup fd = fastdup.create(input_dir="IMAGE_FOLDER/") fd.run()

Although you can run fasdup without the annotations, specifying the labels lets us do more analysis with fastdup such as inspecting mislabels.

Since the dataset is labeled, let's make use of the labels and feed them into fastdup.

fastdup expects the labels to be formatted into a Pandas DataFrame with the columns filename and label.

Let's loop over the directory recursively search for the filenames and labels, and format them into a DataFrame.

import glob

import os

import pandas as pd

# Define the path

path = "test/"

# Define patterns for tif image found in the dataset

patterns = ['*tif']

# Use glob to get all image filenames for both extensions

filenames = [f for pattern in patterns for f in glob.glob(path + '**/' + pattern, recursive=True)]

# Extract the parent folder name for each filename

label = [os.path.basename(os.path.dirname(filename)) for filename in filenames]

# Convert to a pandas DataFrame and add the title label column

df = pd.DataFrame({

'filename': filenames,

'label': label

})df.head()| filename | label | |

|---|---|---|

| 0 | test/advertisement/12636110.tif | advertisement |

| 1 | test/advertisement/926916.tif | advertisement |

| 2 | test/advertisement/502599726+-9726.tif | advertisement |

| 3 | test/advertisement/509132392+-2392.tif | advertisement |

| 4 | test/advertisement/12888045.tif | advertisement |

Run fastdup

To fastdup with the annotations DataFrame, let's point the input_dir to the image folders and annotations to df DataFrame.

fd = fastdup.create(input_dir='test')

fd.run(annotations=df)Now sit back and relax as fastdup analyzes the dataset.

Broken Images

Let's inspect the dataset to find if we have any broken images.

fd.invalid_instances()| filename | label | index | error_code | is_valid | fd_index | |

|---|---|---|---|---|---|---|

| 18039 | test/scientific_publication/2500126531_2500126536.tif | scientific_publication | 18039 | ERROR_CORRUPT_IMAGE | False | 18039 |

Duplicates

Let's visualize the duplicates in a gallery.

To get a detailed DataFrame on the duplicates/near-duplicate found, use the similaritymethod.

similarity_df = fd.similarity()

similarity_df.head()| from | to | distance | filename_from | label_from | index_x | error_code_from | is_valid_from | fd_index_from | filename_to | label_to | index_y | error_code_to | is_valid_to | fd_index_to | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 5323 | 17276 | 1.0 | test/resume/0001489550.tif | resume | 5323 | VALID | True | 5323 | test/memo/0001461863.tif | memo | 17276 | VALID | True | 17276 |

| 1 | 21188 | 2189 | 1.0 | test/scientific_report/2056457981.tif | scientific_report | 21188 | VALID | True | 21188 | test/advertisement/91572245_91572246.tif | advertisement | 2189 | VALID | True | 2189 |

| 2 | 2358 | 1353 | 1.0 | test/advertisement/2072281170.tif | advertisement | 2358 | VALID | True | 2358 | test/advertisement/2073352207.tif | advertisement | 1353 | VALID | True | 1353 |

| 3 | 26877 | 1353 | 1.0 | test/news_article/2083785419.tif | news_article | 26877 | VALID | True | 26877 | test/advertisement/2073352207.tif | advertisement | 1353 | VALID | True | 1353 |

| 4 | 20715 | 1353 | 1.0 | test/scientific_report/2505149213.tif | scientific_report | 20715 | VALID | True | 20715 | test/advertisement/2073352207.tif | advertisement | 1353 | VALID | True | 1353 |

We can get the number of duplicates/near-duplicates by filtering them on the distance score. A distance of 1.0 is an exact copy, and vice versa.

near_duplicates = similarity_df[similarity_df["distance"] >= 0.99]

near_duplicates = near_duplicates[["distance","filename_from", "filename_to", "label_from", "label_to"]]

len(near_duplicates)1392Slice the DataFrame to view related columns.

near_duplicates.head()| distance | filename_from | filename_to | label_from | label_to |

|---|---|---|---|---|

| 1.0 | test/resume/0001489550.tif | test/memo/0001461863.tif | resume | memo |

| 1.0 | test/scientific_report/2056457981.tif | test/advertisement/91572245_91572246.tif | scientific_report | advertisement |

| 1.0 | test/advertisement/2072281170.tif | test/advertisement/2073352207.tif | advertisement | advertisement |

| 1.0 | test/news_article/2083785419.tif | test/advertisement/2073352207.tif | news_article | advertisement |

| 1.0 | test/scientific_report/2505149213.tif | test/advertisement/2073352207.tif | scientific_report | advertisement |

TipThat's a lot of (1392) duplicates! Not cool for a test dataset. Using fastdup we just conveniently surfaced these duplicates for further action.

Typically, we'd just remove these duplicates from the dataset as they do not add value. But we will leave this step to you as the data curator.

Image Clusters

fastdup also includes a gallery to view image clusters.

fd.vis.component_gallery()

TipThe components gallery gives a bird's eye view of how similar images exists in your dataset as clusters.

Statistical Gallery

View the dataset from a statistical point of view to show bright/dark/blurry images from the dataset.

fd.vis.stats_gallery(metric='bright')

TipNot all bright/dark blurry images are useful. In this dataset, we found documents that are totally black or white. We'll leave it to you to decide whether these images are useful.

View DataFrame with image statistics.

fd.img_stats().head()| index | img_w | img_h | unique | blur | mean | min | max | stdv | file_size | contrast | filename | label | error_code | is_valid | fd_index |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 762 | 1000 | 255 | 21070.7559 | 229.3038 | 0.0 | 255.0 | 72.2897 | 106922 | 1.0 | test/advertisement/12636110.tif | advertisement | VALID | True | 0 |

| 1 | 762 | 1000 | 256 | 12831.0820 | 229.3935 | 0.0 | 255.0 | 74.0765 | 62048 | 1.0 | test/advertisement/926916.tif | advertisement | VALID | True | 1 |

| 2 | 754 | 1000 | 256 | 41271.8359 | 196.6943 | 0.0 | 255.0 | 69.2706 | 589350 | 1.0 | test/advertisement/502599726+-9726.tif | advertisement | VALID | True | 2 |

| 3 | 754 | 1000 | 256 | 15565.9248 | 243.6986 | 0.0 | 255.0 | 46.8760 | 73854 | 1.0 | test/advertisement/509132392+-2392.tif | advertisement | VALID | True | 3 |

| 4 | 762 | 1000 | 256 | 11803.9893 | 247.4764 | 0.0 | 255.0 | 39.0911 | 54344 | 1.0 | test/advertisement/12888045.tif | advertisement | VALID | True | 4 |

Mislabels

Since we ran fastdup with labels, we can inspect for potential mislabels. Let's first visualize it via the similarity gallery.

fd.vis.similarity_gallery()

TipIn the similarity gallery fastdup surfaces the images that are visually similar to one another yet has different labels.

Wrap Up

That's it! We've just conveniently surfaced many issues with this dataset by running fastdup. By taking care of dataset quality issues, we hope this will help you train better models.

Questions about this tutorial? Reach out to us on our Slack channel!

VL Profiler - A faster and easier way to diagnose and visualize dataset issues

The team behind fastdup also recently launched VL Profiler, a no-code cloud-based platform that lets you leverage fastdup in the browser.

VL Profiler lets you find:

- Duplicates/near-duplicates.

- Outliers.

- Mislabels.

- Non-useful images.

Here's a highlight of the issues found in the RVL-CDIP test dataset on the VL Profiler.

Free UsageUse VL Profiler for free to analyze issues on your dataset with up to 1,000,000 images.

Not convinced yet?

Interact with a collection of dataset like ImageNet-21K, COCO, and DeepFashion here.

No sign-ups needed.

Updated 7 months ago