Metadata Enrichment with Zero-Shot Segmentation Models

Enrich your visual data with zero-shot segmentation models such as Segment Anything and more.

This notebook is Part 3 of the dataset enrichment notebook series where we utilize various zero-shot models to enrich datasets.

- Part 1 - Dataset Enrichment with Zero-Shot Classification Models

- Part 2 - Dataset Enrichment with Zero-Shot Detection Models

- Part 3 - Dataset Enrichment with Zero-Shot Segmentation Models

If you haven't checked out Part 1 and Part 2, we highly encourage you to go through them first before proceeding with this notebook.

Purpose

This notebook shows how you can enrich the metadata of your visual dataset using the open-source zero-shot image segmentation model Segment Anything (SAM).

By the end of this notebook, you'll learn how to:

- Install and load SAM in fastdup.

- Enrich your dataset using masks generated by the SAM model.

- Run inference using SAM on a single image.

Installation

First, let's install the necessary packages:

- fastdup - To analyze issues in the dataset.

- Segment Anything Model - To use the SAM model.

- gdown - To download demo data hosted on Google Drive.

Run the following to install all the above packages.

!pip install -Uq fastdup git+https://github.com/facebookresearch/segment-anything.git gdown

Test the installation. If there's no error message, we are ready to go.

import fastdup

fastdup.__version__

'1.57'

CUDA Runtime

fastdup runs perfectly on CPUs, but larger models like SAM run much slower on a CPU than on a GPU.

The code in this notebook can be run on CPU or GPU.

However, we highly recommend running in a CUDA-enabled environment to reduce the run time. Running this notebook in Google Colab or Kaggle is a good start!
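A minimal sketch of picking the device at runtime follows. It assumes PyTorch may or may not be installed and falls back to 'cpu' when it is missing; pick_device is a hypothetical helper, not part of fastdup. The returned string uses the same 'cpu' / 'cuda' values that the device parameter of fd.enrich accepts.

```python
def pick_device() -> str:
    """Return 'cuda' when PyTorch can see a GPU, otherwise 'cpu'."""
    try:
        import torch  # imported lazily so the helper also works without PyTorch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(f"Running on: {device}")
```

If this prints 'cpu' in Colab or Kaggle, switching the runtime to a GPU accelerator before running the SAM cells is usually worth the extra step.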

Download Dataset

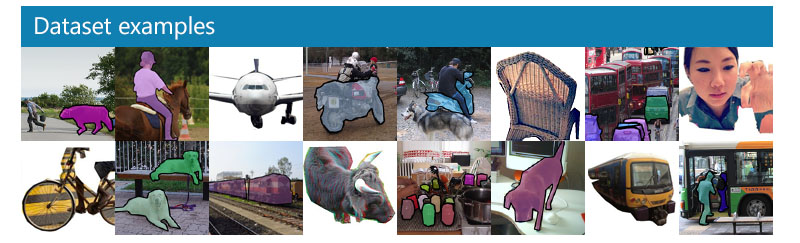

Download the coco-minitrain dataset, a curated mini-training set consisting of 20% of the COCO 2017 training dataset. coco-minitrain consists of 25,000 images and annotations.

First, let's download and extract the coco-minitrain dataset.

gdown --fuzzy https://drive.google.com/file/d/1iSXVTlkV1_DhdYpVDqsjlT4NJFQ7OkyK/view

unzip -qq coco_minitrain_25k.zip

Zero-Shot Segmentation with SAM

In addition to the zero-shot recognition and detection modes, fastdup also supports zero-shot segmentation using the Segment Anything Model (SAM) from Meta AI.

SAM produces high-quality object masks from input prompts such as points or boxes, and it can be used to generate masks for all objects in an image.

1. Inference on a bulk of images

In Part 2 of the enrichment notebook series, we utilized Grounding DINO as a zero-shot detection model and ran an inference over the images in our dataset.

We ended up with a DataFrame consisting of the filename, ram_tags, grounding_dino_bboxes, grounding_dino_scores and grounding_dino_labels columns, as follows.

| | filename | ram_tags | grounding_dino_bboxes | grounding_dino_scores | grounding_dino_labels |
|---|---|---|---|---|---|
| 0 | coco_minitrain_25k/images/val2017/000000382734.jpg | bath . bathroom . doorway . drain . floor . glass door . room . screen door . shower . white | [(94.36, 479.79, 236.6, 589.37), (4.92, 3.73, 475.19, 637.36), (95.94, 514.92, 376.53, 638.46), (41.91, 37.47, 425.01, 637.09), (115.27, 602.26, 164.17, 635.21)] | [0.5789, 0.3895, 0.4444, 0.3018, 0.3601] | [bath, bathroom, floor, glass door, drain] |
| 1 | coco_minitrain_25k/images/val2017/000000508730.jpg | baby . bathroom . bathroom accessory . bin . boy . brush . chair . child . comb . diaper . hair . hairbrush . play . potty . sit . stool . tile wall . toddler . toilet bowl . toilet seat . toy | [(3.58, 2.77, 635.13, 475.62), (30.91, 104.91, 301.75, 476.29), (68.59, 105.02, 266.22, 267.8), (359.26, 116.82, 576.6, 475.9), (374.37, 116.77, 557.19, 254.07), (466.9, 0.71, 638.7, 117.05), (266.95, 433.87, 291.04, 476.78), (466.53, 349.26, 525.87, 405.73), (350.62, 272.66, 571.98, 476.46)] | [0.5898, 0.3738, 0.3679, 0.3641, 0.362, 0.3482, 0.3804, 0.3755, 0.3742] | [bathroom, toddler, hair, toddler, hair, bathroom accessory, hairbrush, diaper, chair] |
| 2 | coco_minitrain_25k/images/val2017/000000202339.jpg | bus . bus station . business suit . carry . catch . city bus . pillar . man . shopping bag . sign . suit . tie . tour bus . walk | [(73.28, 256.74, 135.63, 374.42), (103.53, 105.23, 267.7, 410.18), (98.31, 33.85, 271.8, 434.72), (203.78, 63.88, 463.32, 298.29), (147.5, 106.62, 163.49, 172.9), (164.1, 52.93, 272.88, 152.68), (0.49, 0.76, 82.86, 333.41), (1.96, 2.22, 477.75, 636.07), (398.15, 281.2, 479.01, 545.03), (147.02, 106.66, 163.66, 227.86), (400.67, 98.89, 476.48, 318.45), (165.71, 52.9, 372.94, 185.69)] | [0.5325, 0.4582, 0.4429, 0.4012, 0.365, 0.3587, 0.3338, 0.3322, 0.3212, 0.3168, 0.3056, 0.2986] | [man, bus, shopping bag, bus station, business suit, city bus, pillar, sign, tour bus, carry, catch, walk] |

If you'd like to reproduce the above DataFrame, the Part 2 notebook details the code you need to run.
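Every detected box becomes a prompt for SAM, so low-confidence boxes add run time and can add noisy masks. One optional pre-processing step, not part of the original workflow, is to drop detections below a score threshold first. The sketch below uses the boxes, scores and labels from row 0 of the table above; filter_detections and the 0.4 threshold are illustrative assumptions.

```python
# Boxes, scores and labels copied from row 0 of the table above.
bboxes = [
    (94.36, 479.79, 236.6, 589.37),
    (4.92, 3.73, 475.19, 637.36),
    (95.94, 514.92, 376.53, 638.46),
    (41.91, 37.47, 425.01, 637.09),
    (115.27, 602.26, 164.17, 635.21),
]
scores = [0.5789, 0.3895, 0.4444, 0.3018, 0.3601]
labels = ["bath", "bathroom", "floor", "glass door", "drain"]


def filter_detections(bboxes, scores, labels, min_score=0.4):
    """Keep only detections whose score is at least min_score (hypothetical helper)."""
    keep = [i for i, score in enumerate(scores) if score >= min_score]
    return (
        [bboxes[i] for i in keep],
        [scores[i] for i in keep],
        [labels[i] for i in keep],
    )


kept_boxes, kept_scores, kept_labels = filter_detections(bboxes, scores, labels)
print(kept_labels)  # ['bath', 'floor']
```

The three lists stay aligned after filtering, which matters because the plotting step later pairs boxes, scores and labels by position.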

Similar to all previous examples, you can use the enrich method to add masks to your DataFrame of images.

In the following code snippet, we load the SAM model and specify input_col='grounding_dino_bboxes' to allow SAM to use the bounding boxes as inputs.

fd = fastdup.create(input_dir='./coco_minitrain_25k')

df = fd.enrich(task='zero-shot-segmentation',
               model='segment-anything',
               input_df=df,
               input_col='grounding_dino_bboxes'
     )

This creates a new column in the DataFrame named 'sam_masks', which contains the instance segmentation masks for each bounding box.
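Once the masks are in the DataFrame, they can feed further metadata. The sketch below counts the foreground pixels of each mask. It assumes each 'sam_masks' entry can be converted to a boolean array of shape (N, H, W), one mask per bounding box; that layout is an assumption rather than something this notebook specifies, and mask_areas is a hypothetical helper.

```python
import numpy as np


def mask_areas(masks):
    """Count foreground pixels in each mask of an (N, H, W) stack."""
    masks = np.asarray(masks, dtype=bool)
    return masks.sum(axis=(1, 2)).tolist()


# Synthetic stand-in for one row of 'sam_masks': two 4x6 masks.
masks = np.zeros((2, 4, 6), dtype=bool)
masks[0, 1:3, 2:5] = True  # 2 rows x 3 columns = 6 pixels
masks[1, :, :2] = True     # 4 rows x 2 columns = 8 pixels

areas = mask_areas(masks)
print(areas)  # [6, 8]
```

If the stored masks carry extra singleton dimensions or live on the GPU, they would need to be moved to the CPU and squeezed to (N, H, W) before calling the helper.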

More on fd.enrich

Enriches an input DataFrame by applying a specified model to perform a specific task. Currently supports the following parameters:

| Parameter | Type | Description | Optional | Default |
|---|---|---|---|---|
| task | str | The task to be performed. Supports "zero-shot-classification", "zero-shot-detection" or "zero-shot-segmentation" as argument. | No | - |
| model | str | The model to be used. Supports "segment-anything" if task=='zero-shot-segmentation'. | No | - |
| input_df | DataFrame | The Pandas DataFrame containing the data to be enriched. | No | - |
| input_col | str | The name of the column in input_df to be used as input for the model. | No | - |
| num_rows | int | Number of rows from the top of input_df to be processed. If not specified, all rows are processed. | Yes | None |
| device | str | The device used to run inference. Supports 'cpu' or 'cuda' as argument. Defaults to available devices if not specified. | Yes | None |

To verify the results, let's plot the images with bounding boxes and masks.

from fastdup.models_utils import plot_annotations

plot_annotations(df,
                 image_col='filename',
                 tags_col='ram_tags',
                 bbox_col='grounding_dino_bboxes',
                 scores_col='grounding_dino_scores',
                 labels_col='grounding_dino_labels',
                 masks_col='sam_masks'
)

2. Inference on a single image

To run an inference using the SAM model, import the SegmentAnythingModel class and provide an image-bounding box pair as the input.

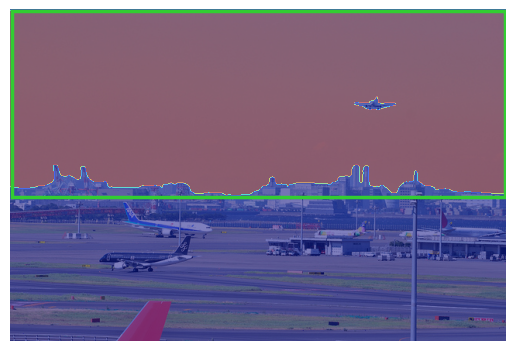

Let's suppose we'd like to run an inference on the following image.

from IPython.display import Image

Image("coco_minitrain_25k/images/val2017/000000449996.jpg")

Call the run_inference method and provide the image and bounding box as the input arguments.

The value torch.tensor((1.47, 1.45, 638.46, 241.37)) is a bounding box of the sky for the image above.

from fastdup.models_sam import SegmentAnythingModel
import torch

model = SegmentAnythingModel()
result = model.run_inference(image_path="coco_minitrain_25k/images/val2017/000000449996.jpg",
                             bboxes=torch.tensor((1.47, 1.45, 638.46, 241.37))) # bounding box of the sky

The result is a mask of the object based on the given bounding box.

result.shape

torch.Size([1, 1, 428, 640])

Let's plot an overlay of the mask and image.

import matplotlib.pyplot as plt

from matplotlib.patches import Rectangle

import numpy as np

from PIL import Image

import torch

# Image

image_path = "coco_minitrain_25k/images/val2017/000000449996.jpg"

pil_image = Image.open(image_path)

image_np = np.array(pil_image)

# Bbox

bbox = torch.tensor((1.47, 1.45, 638.46, 241.37)) # Replace with your actual bounding box tensor

xmin, ymin, xmax, ymax = bbox

# Mask

# Squeeze out the first two dimensions to make it 2D

mask_2d = result.cpu().squeeze(0).squeeze(0)

plt.imshow(image_np)

plt.imshow(mask_2d, cmap='jet', alpha=0.5)

plt.gca().add_patch(Rectangle((xmin, ymin), xmax-xmin, ymax-ymin, linewidth=2.5, edgecolor='limegreen', facecolor='none'))

plt.axis('off')

plt.show()
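The matplotlib overlay above tints the whole frame, background included. As an alternative, the sketch below blends a color into the masked pixels only and reports how much of the image the mask covers. It uses NumPy only, with synthetic stand-ins shaped like the image and the [1, 1, 428, 640] result above; blend_mask, the tint color and the fake "sky" band are illustrative assumptions.

```python
import numpy as np


def blend_mask(image, mask, color=(0, 200, 0), alpha=0.5):
    """Tint only the masked pixels of an RGB uint8 image (hypothetical helper)."""
    image = np.asarray(image, dtype=np.float32)
    mask = np.asarray(mask, dtype=bool)
    out = image.copy()
    out[mask] = (1 - alpha) * image[mask] + alpha * np.array(color, dtype=np.float32)
    return out.round().astype(np.uint8)


# Synthetic stand-ins: a gray 428x640 image and a mask shaped like `result`.
image_np = np.full((428, 640, 3), 100, dtype=np.uint8)
mask_4d = np.zeros((1, 1, 428, 640), dtype=bool)
mask_4d[0, 0, :241, :] = True  # pretend the top band is the sky

mask_2d = mask_4d.squeeze(axis=(0, 1))  # drop the two leading singleton dimensions
overlay = blend_mask(image_np, mask_2d)
coverage = float(mask_2d.mean())        # fraction of pixels inside the mask

print(overlay.shape, round(coverage, 3))
```

With a real result tensor, calling result.cpu().numpy() first would give an array the same helper can consume, and the overlay can be passed straight to plt.imshow.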

You can also load other variants of SAM from the official SAM repo or even your own custom model.

To do so, download the sam_vit_b or sam_vit_l weights into a local folder and pass them to the constructor as follows.

model = SegmentAnythingModel(model_weights="sam_vit_b_01ec64.pth", model_type="vit_b")

model = SegmentAnythingModel(model_weights="sam_vit_l_0b3195.pth", model_type="vit_l")
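A mismatched or missing checkpoint file is an easy mistake when switching variants. The sketch below pairs each model_type with the file name used above and checks that the file exists before the model is built. resolve_checkpoint and the SAM_CHECKPOINTS mapping are hypothetical helpers, not part of fastdup, and the mapping covers only the two variants shown in this notebook.

```python
from pathlib import Path

# File names as used in this notebook; extend the mapping for other variants.
SAM_CHECKPOINTS = {
    "vit_b": "sam_vit_b_01ec64.pth",
    "vit_l": "sam_vit_l_0b3195.pth",
}


def resolve_checkpoint(model_type: str, folder: str = ".") -> Path:
    """Return the checkpoint path for model_type, or raise if it is unusable."""
    if model_type not in SAM_CHECKPOINTS:
        raise ValueError(f"Unknown model_type {model_type!r}; expected one of {sorted(SAM_CHECKPOINTS)}")
    path = Path(folder) / SAM_CHECKPOINTS[model_type]
    if not path.is_file():
        raise FileNotFoundError(f"Checkpoint not found: {path}")
    return path


try:
    weights = resolve_checkpoint("vit_b")
    print(f"Found {weights}")
except FileNotFoundError as err:
    print(err)  # download the weights first, then retry
```

The resolved path could then be passed as str(weights) to the model_weights argument shown above, together with the matching model_type.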

Wrap Up

In this tutorial, we showed how you can run the Segment Anything Model as a zero-shot segmentation model to enrich your dataset.

Next Up

Try out the Google Colab and Kaggle notebooks to reproduce this example.

Questions about this tutorial? Reach out to us on our Slack channel!

VL Profiler - A faster and easier way to diagnose and visualize dataset issues

The team behind fastdup also recently launched VL Profiler, a no-code cloud-based platform that lets you leverage fastdup in the browser.

VL Profiler lets you find:

- Duplicates/near-duplicates.

- Outliers.

- Mislabels.

- Non-useful images.

Here's a highlight of the issues found in the RVL-CDIP test dataset on the VL Profiler.

Free Usage

Use VL Profiler for free to analyze issues on your dataset with up to 1,000,000 images.

Not convinced yet?

Interact with a collection of datasets like ImageNet-21K, COCO, and DeepFashion here.

No sign-ups needed.
