Metadata Enrichment with Zero-Shot Classification Models

Enrich your visual data with zero-shot image classification and tagging models such as Recognize Anything, Tag2Text and more.

This notebook is Part 1 of the dataset enrichment notebook series where we utilize various zero-shot models to enrich datasets.

- Part 1 - Dataset Enrichment with Zero-Shot Classification Models

- Part 2 - Dataset Enrichment with Zero-Shot Detection Models

- Part 3 - Dataset Enrichment with Zero-Shot Segmentation Models

PurposeThis notebook shows how to enrich your image dataset using labels generated with open-source zero-shot image classification (or image tagging) models such as Recognize Anything (RAM) and Tag2Text.

By the end of the notebook, you'll learn how to:

- Install and load the RAM and Tag2Text models in fastdup.

- Enrich the your dataset using labels generated by RAM and Tag2Text model.

- Run inference using RAM and Tag2Text model on a single image.

Installation

First, let's install the necessary packages:

- fastdup - To analyze issues in the dataset.

- Recognize Anything - To use the RAM and Tag2Text model.

- gdown - To download demo data hosted on Google Drive.

Run the following to install all the above packages.

pip install -Uq fastdup git+https://github.com/xinyu1205/recognize-anything.git@119a7ae42fb2ce75459cd9107b353bc508460023 gdownTest the installation. If there's no error message, we are ready to go.

import fastdup

fastdup.__version__'1.57'

CUDA Runtimefastdup runs perfectly on CPUs, but larger models like RAM and Tag2Text runs much slower on CPU compared to GPU.

This codes in this notebook can be run on CPU or GPU.

But, we highly recommend running in CUDA-enabled environment to reduce the run time. Running this notebook in Google Colab or Kaggle is a good start!

Download Dataset

Download the coco-minitrain dataset - A curated mini-training set consisting of 20% of COCO 2017 training dataset. The coco-minitrain consists of 25,000 images and annotations.

First, let's load the dataset from the coco-minitrain dataset.

gdown --fuzzy https://drive.google.com/file/d/1iSXVTlkV1_DhdYpVDqsjlT4NJFQ7OkyK/view

unzip -qq coco_minitrain_25k.zipInference with RAM and Tag2Text

Within fastdup you can readily use the zero-shot image tagging models such as Recognize Anything Model (RAM) and Tag2Text. Both Tag2Text and RAM exhibit strong recognition ability.

- RAM is an image tagging model, which can recognize any common category with high accuracy. Outperforms CLIP and BLIP.

- Tag2Text is a vision-language model guided by tagging, which can support caption, retrieval, and tagging.

.](https://files.readme.io/5b84092-ram.png)

Output comparison of BLIP, Tag2Text and RAM. Source - GitHub repo.

1. Inference on a bulk of images

To run inference on the downloaded dataset, you first need to load the image paths into a DataFrame.

import pandas as pd

from fastdup.utils import get_images_from_path

fd = fastdup.create(input_dir='./coco_minitrain_25k')

filenames = get_images_from_path(fd.input_dir)

df = pd.DataFrame(filenames, columns=["filename"])Here's a DataFrame with images loaded from the folder.

| filename | |

|---|---|

| 0 | coco_minitrain_25k/images/val2017/000000314182.jpg |

| 1 | coco_minitrain_25k/images/val2017/000000531707.jpg |

| 2 | coco_minitrain_25k/images/val2017/000000393569.jpg |

| 3 | coco_minitrain_25k/images/val2017/000000001761.jpg |

| 4 | coco_minitrain_25k/images/val2017/000000116208.jpg |

| 5 | coco_minitrain_25k/images/val2017/000000581781.jpg |

| 6 | coco_minitrain_25k/images/val2017/000000449579.jpg |

| 7 | coco_minitrain_25k/images/val2017/000000200152.jpg |

| 8 | coco_minitrain_25k/images/val2017/000000232563.jpg |

| 9 | coco_minitrain_25k/images/val2017/000000493864.jpg |

| 10 | coco_minitrain_25k/images/val2017/000000492362.jpg |

| 11 | coco_minitrain_25k/images/val2017/000000031217.jpg |

| 12 | coco_minitrain_25k/images/val2017/000000171050.jpg |

| 13 | coco_minitrain_25k/images/val2017/000000191288.jpg |

| 14 | coco_minitrain_25k/images/val2017/000000504074.jpg |

| 15 | coco_minitrain_25k/images/val2017/000000006763.jpg |

| 16 | coco_minitrain_25k/images/val2017/000000313588.jpg |

| 17 | coco_minitrain_25k/images/val2017/000000060090.jpg |

| 18 | coco_minitrain_25k/images/val2017/000000043816.jpg |

| 19 | coco_minitrain_25k/images/val2017/000000009400.jpg |

Running zero-shot image tagging on the DataFrame is as easy as:

NUM_ROWS_TO_ENRICH = 10 # for demonstration, only run on 10 rows only.

df = fd.enrich(task='zero-shot-classification',

model='recognize-anything-model', # specify model

input_df=df, # the DataFrame of image files to enrich.

input_col='filename', # the name of the filename column.

num_rows=NUM_ROWS_TO_ENRICH # number of rows in the DataFrame to enrich. Optional.

)

More onfd.enrichEnriches an input

DataFrameby applying a specified model to perform a specific task.Currently supports the following parameters:

Parameter

Type

Description

Optional

Default

taskstr

The task to be performed.

Supports

"zero-shot-classification",

"zero-shot-detection"or

"zero-shot-segmentation"as argument.

No

-

modelstr

The model to be used.

Supports

"recognize-anything-model"or

"tag2text"if

task=='zero-shot-classification'.

No

-

input_dfDataFrame

The Pandas

DataFramecontaining the data to be enriched.

No

-

input_colstr

The name of the column in

input_dfto be used as input for the model.

No

-

num_rowsint

Number of rows from the top of

input_dfto be processed.

If not specified, all rows are processed.

Yes

None

devicestr

The device used to run inference.

Supports

'cpu'or

'cuda'as argument.

Defaults to available devices if not specified.

Yes

None

As a result of running fd.enrich, an additional column 'ram_tags' is appended into the DataFrame listing all the relevant tags for the corresponding image.

| filename | ram_tags | |

|---|---|---|

| 0 | coco_minitrain_25k/images/val2017/000000314182.jpg | appetizer . biscuit . bowl . broccoli . cream . carrot . chip . container . counter top . table . dip . plate . fill . food . platter . snack . tray . vegetable . white . yoghurt |

| 1 | coco_minitrain_25k/images/val2017/000000531707.jpg | bench . black . seawall . coast . couple . person . sea . park bench . photo . sit . water |

| 2 | coco_minitrain_25k/images/val2017/000000393569.jpg | bathroom . bed . bunk bed . child . doorway . girl . person . laptop . open . read . room . sit . slide . toilet bowl . woman |

| 3 | coco_minitrain_25k/images/val2017/000000001761.jpg | plane . fighter jet . bridge . cloudy . fly . formation . jet . sky . water |

| 4 | coco_minitrain_25k/images/val2017/000000116208.jpg | bacon . bottle . catch . cheese . table . dinning table . plate . food . wine . miss . pie . pizza . platter . sit . topping . tray . wine bottle . wine glass |

| 5 | coco_minitrain_25k/images/val2017/000000581781.jpg | banana . bin . bundle . crate . display . fruit . fruit market . fruit stand . kiwi . market . produce . sale |

| 6 | coco_minitrain_25k/images/val2017/000000449579.jpg | ball . catch . court . man . play . racket . red . service . shirt . stretch . swing . swinge . tennis . tennis court . tennis match . tennis player . tennis racket . woman |

| 7 | coco_minitrain_25k/images/val2017/000000200152.jpg | attach . building . christmas light . flag . hang . light . illuminate . neon light . night . night view . pole . sign . traffic light . street corner . street sign |

| 8 | coco_minitrain_25k/images/val2017/000000232563.jpg | catch . person . man . pavement . rain . stand . umbrella . walk |

| 9 | coco_minitrain_25k/images/val2017/000000493864.jpg | beach . carry . catch . coast . man . sea . sand . smile . stand . surfboard . surfer . wet . wetsuit |

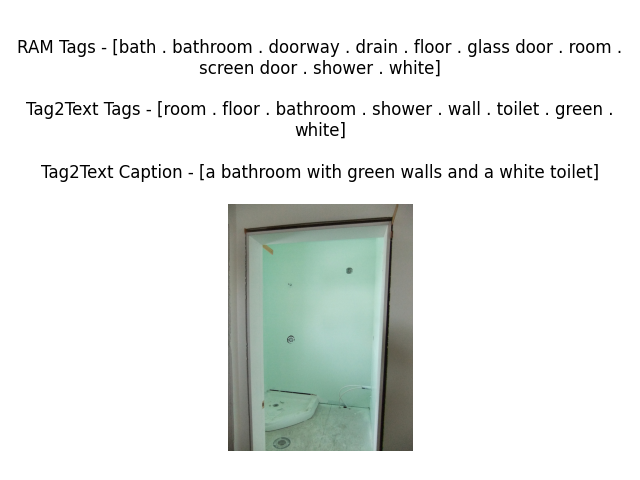

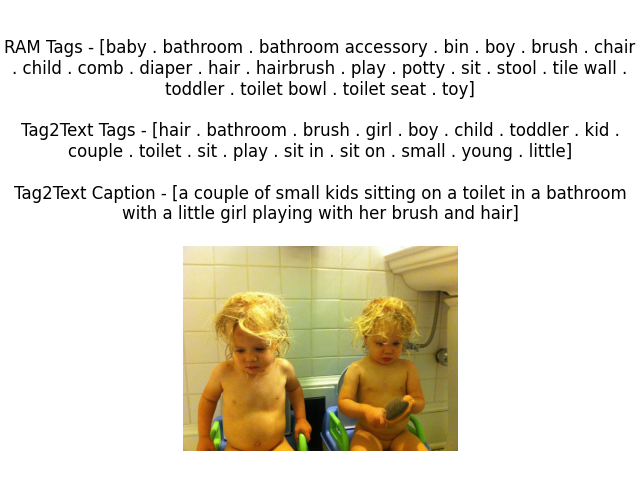

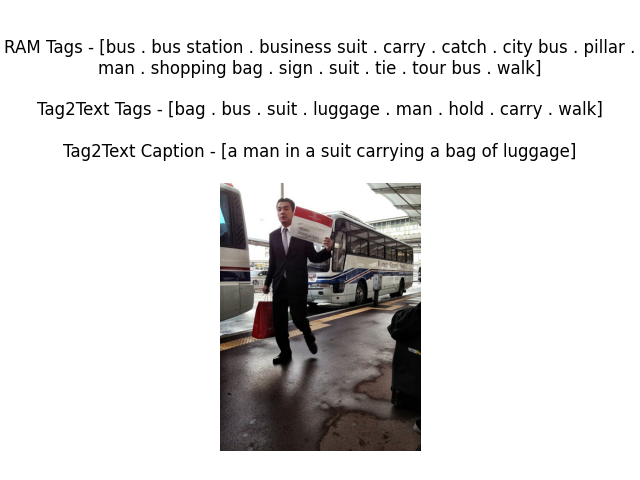

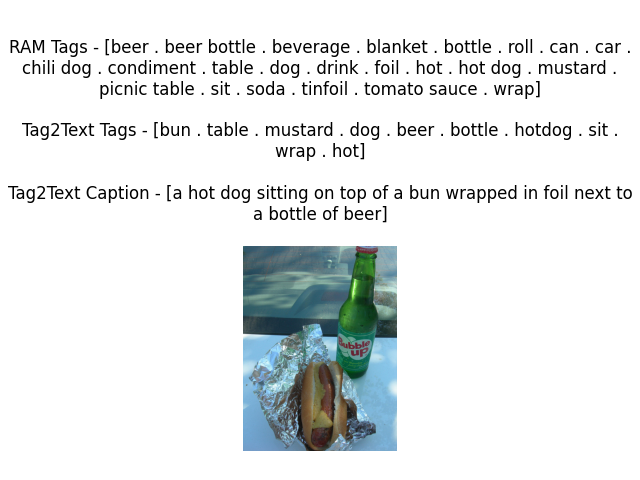

Let's plot the results of the enrichment to see the tags and captions given by the RAM and Tag2Text models.

import pandas as pd

import matplotlib.pyplot as plt

from PIL import Image

# Iterate over each row in the dataframe

for index, row in df.iterrows():

filename = row['filename']

ram_labels = row['ram_tags']

tag2text_labels = row['tag2text_tags']

tag2text_caption = row['tag2text_caption']

# Read the image using PIL

image = Image.open(filename)

# Plot the image

plt.imshow(image)

plt.title(f"RAM Tags - [{ram_labels}]\n\nTag2Text Tags - [{tag2text_labels}]\n\nTag2Text Caption - [{tag2text_caption}]\n", wrap=True)

plt.axis('off')

plt.show()

plt.close()

2. Inference on a single image

We can use these models in fastdup in a few lines of code.

Let's suppose we'd like to run an inference on the following image.

from IPython.display import Image

Image("coco_minitrain_25k/images/val2017/000000181796.jpg")

We can just import the RecognizeAnythingModel and run an inference.

from fastdup.models_ram import RecognizeAnythingModel

model = RecognizeAnythingModel()

result = model.run_inference("coco_minitrain_25k/images/val2017/000000181796.jpg")Let's inspect the results.

print(result)bean . cup . table . dinning table . plate . food . fork . fruit . wine . meal . meat . peak . platter . potato . silverware . utensil . vegetable . white . wine glass

TipAs shown above, the model outputs all associated tags with the query image.

But what if you have a collection of images and would like to run zero-shot classification on all of them? fastdup provides a convenient

fd.enrichAPI to for convenience.

Wrap Up

In this tutorial, we showed how you can run zero-shot image classification (or image tagging) models to enrich your dataset.

This notebook is Part 1 of the dataset enrichment notebook series where we utilize various zero-shot models to enrich datasets.

- Part 1 - Dataset Enrichment with Zero-Shot Classification Models

- Part 2 - Dataset Enrichment with Zero-Shot Detection Models

- Part 3 - Dataset Enrichment with Zero-Shot Segmentation Models

Next UpTry out the Google Colab and Kaggle notebook to reproduce this example.

Also, check out Part 2 of the series where we explore how to generate bounding boxes from the tags using zero-shot detection models like Grounding DINO. See you there!

Questions about this tutorial? Reach out to us on our Slack channel!

VL Profiler - A faster and easier way to diagnose and visualize dataset issues

The team behind fastdup also recently launched VL Profiler, a no-code cloud-based platform that lets you leverage fastdup in the browser.

VL Profiler lets you find:

- Duplicates/near-duplicates.

- Outliers.

- Mislabels.

- Non-useful images.

Here's a highlight of the issues found in the RVL-CDIP test dataset on the VL Profiler.

Free UsageUse VL Profiler for free to analyze issues on your dataset with up to 1,000,000 images.

Not convinced yet?

Interact with a collection of datasets like ImageNet-21K, COCO, and DeepFashion here.

No sign-ups needed.

Updated 7 months ago