Zero Shot Visual Data Enrichment

Enrich your visual data with zero-shot models such as Recognize Anything, Grounding DINO, Segment Anything and more.

fastdup provides a convenient an enrichment API that let's you leverage the capabilities of these models in just a few lines of code.

In this post, we show an end-to-end example of how you can enrich the metadata of your visual using open-source zero-shot models such as Recognize Anything, Grounding DINO, and Segment Anything.

Installation

First, let's install the necessary packages:

- fastdup - To analyze issues in the dataset.

- Recognize Anything - To use the RAM and Tag2Text model.

- MMEngine, MMDetection, groundingdino-py - To use the Grounding DINO and MMDetection model.

- Segment Anything Model - To use the SAM model.

Run the following to install all the above packages.

pip install -Uq fastdup mmengine mmdet groundingdino-py git+https://github.com/xinyu1205/recognize-anything.git git+https://github.com/facebookresearch/segment-anything.git gdownTest the installation. If there's no error message, we are ready to go.

import fastdup

fastdup.__version__'1.51'Download Dataset

Download the coco-minitrain dataset - a curated mini training set consisting of 20% of COCO 2017 training dataset. The coco-minitrain consists of 25,000 images and annotations.

Load Images

First, let's load the dataset from the coco-minitrain dataset folder into a DataFrame.

gdown --fuzzy https://drive.google.com/file/d/1iSXVTlkV1_DhdYpVDqsjlT4NJFQ7OkyK/view

unzip -qq coco_minitrain_25k.zipZero-Shot Classification with RAM and Tag2Text

Within fastdup you can readily use the zero-shot classifier models such as Recognize Anything Model (RAM) and Tag2Text. Both Tag2Text and RAM exhibit strong recognition ability.

- RAM is an image tagging model, which can recognize any common category with high accuracy. Outperforms CLIP and BLIP.

- Tag2Text is a vision-language model guided by tagging, which can support caption, retrieval, and tagging.

.](https://files.readme.io/5b84092-ram.png)

Output comparison of BLIP, Tag2Text and RAM. Source - GitHub repo.

1. Inference on a single image

We can use these models in fastdup in a few lines of code.

Let's suppose we'd like to run an inference on the following image.

from IPython.display import Image

Image("coco_minitrain_25k/images/val2017/000000181796.jpg")

We can just import the RecognizeAnythingModel and run an inference.

from fastdup.models_ram import RecognizeAnythingModel

model = RecognizeAnythingModel()

result = model.run_inference("coco_minitrain_25k/images/val2017/000000181796.jpg")Let's inspect the results.

print(result)bean . cup . table . dinning table . plate . food . fork . fruit . wine . meal . meat . peak . platter . potato . silverware . utensil . vegetable . white . wine glass

TipAs shown above, the model outputs all associated tags with the query image.

But what if you have a collection of images and would like to run zero-shot classification on all of them? fastdup provides a convenient

fd.enrichAPI to for convenience.

2. Inference on a bulk of images

We provide a convenient API fd.enrich to enrich the metadata of the images loaded into a DataFrame.

Let's first load the images from the folder into a DataFrame

import pandas as pd

from fastdup.utils import get_images_from_path

fd = fastdup.create(input_dir='./coco_minitrain_25k')

filenames = get_images_from_path(fd.input_dir)

df = pd.DataFrame(filenames, columns=["filename"])Here's a DataFrame with images loaded from the folder.

| filename | |

|---|---|

| 0 | coco_minitrain_25k/images/val2017/000000314182.jpg |

| 1 | coco_minitrain_25k/images/val2017/000000531707.jpg |

| 2 | coco_minitrain_25k/images/val2017/000000393569.jpg |

| 3 | coco_minitrain_25k/images/val2017/000000001761.jpg |

| 4 | coco_minitrain_25k/images/val2017/000000116208.jpg |

| 5 | coco_minitrain_25k/images/val2017/000000581781.jpg |

| 6 | coco_minitrain_25k/images/val2017/000000449579.jpg |

| 7 | coco_minitrain_25k/images/val2017/000000200152.jpg |

| 8 | coco_minitrain_25k/images/val2017/000000232563.jpg |

| 9 | coco_minitrain_25k/images/val2017/000000493864.jpg |

| 10 | coco_minitrain_25k/images/val2017/000000492362.jpg |

| 11 | coco_minitrain_25k/images/val2017/000000031217.jpg |

| 12 | coco_minitrain_25k/images/val2017/000000171050.jpg |

| 13 | coco_minitrain_25k/images/val2017/000000191288.jpg |

| 14 | coco_minitrain_25k/images/val2017/000000504074.jpg |

| 15 | coco_minitrain_25k/images/val2017/000000006763.jpg |

| 16 | coco_minitrain_25k/images/val2017/000000313588.jpg |

| 17 | coco_minitrain_25k/images/val2017/000000060090.jpg |

| 18 | coco_minitrain_25k/images/val2017/000000043816.jpg |

| 19 | coco_minitrain_25k/images/val2017/000000009400.jpg |

Running a zero-shot recognition on the DataFrame is as easy as:

NUM_ROWS_TO_ENRICH = 10 # for demonstration, only run on 10 rows.

df = fd.enrich(task='zero-shot-classification',

model='recognize-anything-model', # specity model

input_df=df, # the DataFrame of image files to enrich.

input_col='filename', # the name of the filename column.

num_rows=NUM_ROWS_TO_ENRICH, # number of rows in the DataFrame to enrich.

device="cuda" # run on CPU or GPU.

)As a result, an additional column 'ram_tags' is appended into the DataFrame listing all the relevant tags for the corresponding image.

| filename | ram_tags | |

|---|---|---|

| 0 | coco_minitrain_25k/images/val2017/000000314182.jpg | appetizer . biscuit . bowl . broccoli . cream . carrot . chip . container . counter top . table . dip . plate . fill . food . platter . snack . tray . vegetable . white . yoghurt |

| 1 | coco_minitrain_25k/images/val2017/000000531707.jpg | bench . black . seawall . coast . couple . person . sea . park bench . photo . sit . water |

| 2 | coco_minitrain_25k/images/val2017/000000393569.jpg | bathroom . bed . bunk bed . child . doorway . girl . person . laptop . open . read . room . sit . slide . toilet bowl . woman |

| 3 | coco_minitrain_25k/images/val2017/000000001761.jpg | plane . fighter jet . bridge . cloudy . fly . formation . jet . sky . water |

| 4 | coco_minitrain_25k/images/val2017/000000116208.jpg | bacon . bottle . catch . cheese . table . dinning table . plate . food . wine . miss . pie . pizza . platter . sit . topping . tray . wine bottle . wine glass |

| 5 | coco_minitrain_25k/images/val2017/000000581781.jpg | banana . bin . bundle . crate . display . fruit . fruit market . fruit stand . kiwi . market . produce . sale |

| 6 | coco_minitrain_25k/images/val2017/000000449579.jpg | ball . catch . court . man . play . racket . red . service . shirt . stretch . swing . swinge . tennis . tennis court . tennis match . tennis player . tennis racket . woman |

| 7 | coco_minitrain_25k/images/val2017/000000200152.jpg | attach . building . christmas light . flag . hang . light . illuminate . neon light . night . night view . pole . sign . traffic light . street corner . street sign |

| 8 | coco_minitrain_25k/images/val2017/000000232563.jpg | catch . person . man . pavement . rain . stand . umbrella . walk |

| 9 | coco_minitrain_25k/images/val2017/000000493864.jpg | beach . carry . catch . coast . man . sea . sand . smile . stand . surfboard . surfer . wet . wetsuit |

Zero-Shot Detection with Grounding DINO

Apart from classification models, fastdup also supports zero-shot detection models like Grounding DINO (and more to come).

Grounding DINO is a powerful open-set zero-shot detection model. It accepts image-text pairs as inputs and outputs a bounding box.

1. Inference on single image

fastdup provides an easy way to load the Grounding DINO model and run an inference.

Let's suppose we have the following image and would like to run an inference with the Grounding DINO model.

2. Inference on a DataFrame of images

To run the enrichment on a DataFrame, use the .enrich method and specify model=grounding-dino. By default fastdup loads the smaller variant (Swin-T) backbone for enrichment.

Also specify the DataFrame to run the enrichment on and the name of the column as the input to the Grounding DINO model. In this example, we take the text prompt from the ram_tags column which we have computed earlier.

3. Searching for Specific Objects with Custom Text Prompt

Let's suppose you'd like to search for specific objects in your dataset, you can create a column in the DataFrame specifying the objects of interest and run the .enrich method.

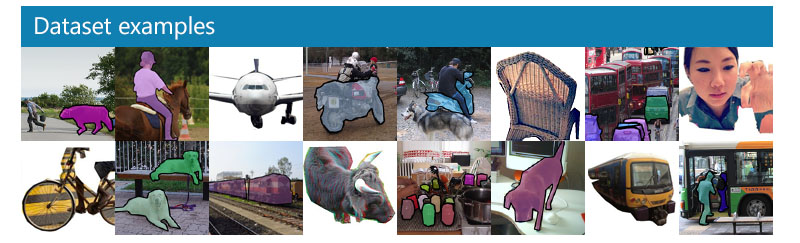

Zero-Shot Segmentation with SAM

In addition to the zer-shot classification and detection modes, fastdup also supports zero-shot segmentation using the Segment Anything Model (SAM) from MetaAI.

SAM produces high quality object masks from input prompts such as points or boxes, and it can be used to generate masks for all objects in an image.

1. Inference on a single image

To run an inference using the SAM model, import the SegmentAnythingModel class and provide an image-bounding box pair as the input.

2. Inference on a DataFrame of images

Similar to all previous examples, you can use the enrich method to add masks to your DataFrame of images.

In the following code snippet, we load the SAM model and specify input_col='grounding_dino_bboxes' to allow SAM to use the bounding boxes as inputs.

Convert Annotations to COCO Format

Once the enrichment is complete, you can also conveniently export the DataFrame into the COCO .json annotation format. For now, only the bounding boxes and labels are exported. Masks will be added in a future release.

Run fastdup

You can optionally analyze the exported annotations in fastdup to evalute the quality of the annotations.

Visualize

You can use all of fastdup gallery methods to view duplicates, clusters, etc.

Let's view the image clusters.

Wrap Up

In this tutorial, we showed how you can run zero-shot models to enrich your dataset.

Questions about this tutorial? Reach out to us on our Slack channel!

VL Profiler - A faster and easier way to diagnose and visualize dataset issues

The team behind fastdup also recently launched VL Profiler, a no-code cloud-based platform that lets you leverage fastdup in the browser.

VL Profiler lets you find:

- Duplicates/near-duplicates.

- Outliers.

- Mislabels.

- Non-useful images.

Here's a highlight of the issues found in the RVL-CDIP test dataset on the VL Profiler.

Free UsageUse VL Profiler for free to analyze issues on your dataset with up to 1,000,000 images.

Not convinced yet?

Interact with a collection of datasets like ImageNet-21K, COCO, and DeepFashion here.

No sign-ups needed.

Updated 6 months ago